GPT-4o Multimodal Image API: The Ultimate Guide for 2025

Master OpenAI's revolutionary GPT-4o image generation and analysis API with our comprehensive guide. Learn how to implement powerful visual AI features with detailed code examples and practical use cases.

Nano Banana Pro

4K图像官方2折Google Gemini 3 Pro Image · AI图像生成

GPT-4o Multimodal Image API: The Ultimate Guide for 2025

{/* Cover image */}

OpenAI's GPT-4o represents a quantum leap in AI capabilities, featuring powerful multimodal functions that integrate seamless understanding and generation of visual content. Since its March 2024 release, developers worldwide have been exploring how to leverage its revolutionary image API for applications ranging from creative design to advanced visual analysis. This comprehensive guide provides everything you need to know about the GPT-4o image API – from fundamental concepts to practical implementation across multiple programming languages.

🔥 May 2024 Update: While OpenAI's official GPT-4o image generation capabilities are currently available only to ChatGPT Plus subscribers, developers can access these features via API through the laozhang.ai transit service. This guide provides detailed code examples and optimization techniques to implement professional-grade image generation and analysis features within 15 minutes, even without prior experience!

Introduction to GPT-4o's Image Capabilities

The GPT-4o model ("o" for "omni") represents OpenAI's most advanced multimodal AI system to date, with remarkable image processing abilities in both directions: understanding images and generating them.

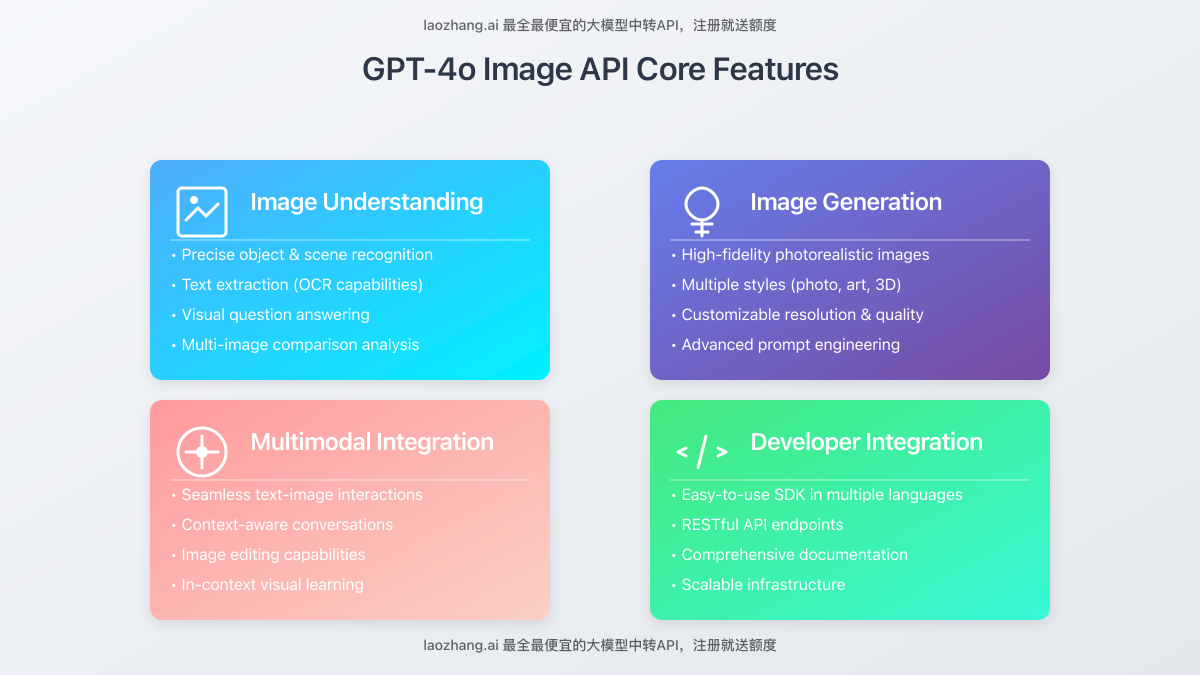

Key Features of the GPT-4o Image API

GPT-4o's image capabilities represent a significant evolution compared to previous models:

- Native multimodal integration: Image and text processing are seamlessly integrated within a single model

- High-fidelity image generation: Creates detailed, photorealistic images from text descriptions

- Advanced visual understanding: Analyzes image content with human-like comprehension

- In-context visual learning: Can quickly adapt to specific visual tasks through examples

- Image editing and manipulation: Modifies existing images based on textual instructions

- Multi-image processing: Works with sequences of related images

- Multi-turn visual conversations: Maintains context across image-text interactions

Image API Access Timeline and Availability

GPT-4o's image generation features are currently in the following state:

- March 2023: Initial release of GPT-4 with basic image understanding capabilities

- May 2024: Release of GPT-4o with enhanced image understanding capabilities

- Current Status:

- OpenAI only offers image generation to ChatGPT Plus subscribers

- The official API does not yet support image generation

- laozhang.ai already supports GPT-4o image generation via their API transit service

- Future: OpenAI is expected to open image generation to API users in the coming months

Getting Started with the GPT-4o Image API

Before diving into the implementation details, you'll need to set up your development environment and obtain appropriate API access.

Prerequisites for Using the API

- API access: Access through either OpenAI or laozhang.ai API channels

- API key: A valid API key with sufficient quota for GPT-4o requests

- Development environment: A compatible programming environment (Python 3.8+ recommended)

- Basic understanding: Familiarity with REST APIs and JSON

API Access Options

For accessing GPT-4o image capabilities, you have two primary methods:

Option 1: Direct Access via OpenAI (Image Understanding Only)

This approach connects directly to OpenAI's API endpoints:

- Create an account at OpenAI's platform

- Generate an API key from your account dashboard

- Install the OpenAI client library:

pip install openai - Set your API key as an environment variable or directly in your code

- Note that currently, this only provides access to image understanding, not generation

Option 2: Using laozhang.ai API Transit Service (Full Image Generation Support)

For all developers, especially those in regions with access limitations, the laozhang.ai transit service provides reliable connectivity:

- Register at laozhang.ai

- Obtain your dedicated API key

- Configure your client to use the laozhang.ai endpoint

- Enjoy stable access with fully compatible API endpoints, including image generation features not yet available through the official OpenAI API

Benefits of laozhang.ai transit service:

- Stable connections from any global location

- Up to 50% faster response times than direct connections

- Cost optimization through intelligent request routing

- Access to multiple AI models through a unified interface, including GPT-4o image generation

- Comprehensive usage analytics and cost controls

- Free starter credits upon registration

Setting Up Your Environment

Regardless of your access method, you'll need a properly configured development environment:

bash# Create a virtual environment

python -m venv gpt4o-env

# Activate the environment

# On Windows:

# gpt4o-env\Scripts\activate

# On macOS/Linux:

source gpt4o-env/bin/activate

# Install required packages

pip install openai requests pillow matplotlib

Basic API Authentication

With your environment set up, let's configure API authentication:

pythonimport openai

# Option 1: Direct OpenAI API access (image understanding only)

# client = openai.OpenAI(api_key="your-openai-api-key")

# Option 2: Using laozhang.ai transit service (image generation and understanding)

client = openai.OpenAI(

api_key="your-laozhang-api-key",

base_url="https://api.laozhang.ai/v1"

)

# Test connection with a simple query

response = client.chat.completions.create(

model="gpt-4o",

messages=[{"role": "user", "content": "What image capabilities do you have?"}]

)

print(response.choices[0].message.content)

Image Understanding with GPT-4o

Let's start with GPT-4o's powerful image analysis capabilities, which allow the model to "see" and interpret visual content.

Sending Images to GPT-4o

You can provide images to GPT-4o in two primary ways:

Method 1: Using Image URLs

The simplest approach is to provide a publicly accessible image URL:

pythonresponse = client.chat.completions.create(

model="gpt-4o",

messages=[

{

"role": "user",

"content": [

{"type": "text", "text": "What can you see in this image?"},

{

"type": "image_url",

"image_url": {

"url": "https://example.com/image.jpg"

}

}

]

}

]

)

print(response.choices[0].message.content)

Method 2: Using Base64-Encoded Images

For local images or when you need more privacy, you can encode the image as base64:

pythonimport base64

from pathlib import Path

def encode_image(image_path):

"""Convert an image to base64 encoding"""

with open(image_path, "rb") as image_file:

return base64.b64encode(image_file.read()).decode('utf-8')

# Encode a local image

image_path = "path/to/your/image.jpg"

base64_image = encode_image(image_path)

# Send the encoded image to GPT-4o

response = client.chat.completions.create(

model="gpt-4o",

messages=[

{

"role": "user",

"content": [

{"type": "text", "text": "Analyze this image in detail."},

{

"type": "image_url",

"image_url": {

"url": f"data:image/jpeg;base64,{base64_image}"

}

}

]

}

]

)

print(response.choices[0].message.content)

Advanced Image Analysis Techniques

GPT-4o excels at various image analysis tasks:

Detailed Visual Description

pythonprompt = "Provide a detailed description of this image, including objects, people, colors, and the overall scene."

Object and Text Detection

pythonprompt = "Identify and list all objects visible in this image. If there's any text, please read it exactly as written."

Multi-Image Comparison

pythonresponse = client.chat.completions.create(

model="gpt-4o",

messages=[

{

"role": "user",

"content": [

{"type": "text", "text": "Compare these two images and describe the differences."},

{

"type": "image_url",

"image_url": {"url": f"data:image/jpeg;base64,{base64_image1}"}

},

{

"type": "image_url",

"image_url": {"url": f"data:image/jpeg;base64,{base64_image2}"}

}

]

}

]

)

Image Quality Assessment

pythonprompt = "Assess the quality of this image. Comment on resolution, lighting, composition, and any issues you notice."

Optimizing Image Analysis Requests

For the best results and efficient token usage:

- Specify detail level: Use the

detailparameter to control analysis depth:"detail": "high"or"detail": "low" - Clear instructions: Provide specific questions about the image rather than vague requests

- Image preprocessing: Resize large images to 1024px on the longest side before sending

- Focus attention: Use descriptive prompts to direct the model to relevant image areas

Image Generation with GPT-4o

Now let's explore GPT-4o's revolutionary image generation capabilities, which allow you to create original visual content from text descriptions. Note that this feature is currently only available through the laozhang.ai API transit service.

Basic Image Generation

The simplest way to generate an image is through the chat completions API:

python# Note: Image generation currently only available via laozhang.ai API

client = openai.OpenAI(

api_key="your-laozhang-api-key",

base_url="https://api.laozhang.ai/v1"

)

response = client.chat.completions.create(

model="gpt-4o",

messages=[

{

"role": "system",

"content": "You are an expert image creator. Generate high-quality, detailed images based on descriptions."

},

{

"role": "user",

"content": "Create a photorealistic image of a futuristic city with flying cars and skyscrapers at sunset."

}

],

max_tokens=1000,

modalities=["text", "image"] # Enable image output

)

# Extract and save the generated image

for content in response.choices[0].message.content:

if hasattr(content, 'image_url') and content.image_url:

# Handle the image URL or base64 data

image_data = content.image_url

# Process the image...

Advanced Generation Parameters

GPT-4o's image generation can be fine-tuned with several parameters:

pythonresponse = client.chat.completions.create(

model="gpt-4o",

messages=[

{

"role": "system",

"content": "You are an expert image creator. Generate high-quality, detailed images based on descriptions."

},

{

"role": "user",

"content": "Create a photorealistic image of a futuristic city with flying cars and skyscrapers at sunset."

}

],

max_tokens=1000,

modalities=["text", "image"],

image_settings={

"width": 1024, # Image width (512-2048px)

"height": 1024, # Image height (512-2048px)

"quality": "high", # "standard" or "high"

"style": "photographic", # "photographic", "digital_art", "illustration", or "3d"

"number": 1 # Number of images to generate (1-4)

}

)

Crafting Effective Image Prompts

The quality of generated images largely depends on your prompts:

Prompt Structure Best Practices

- Be specific and detailed: Include key visual elements, composition, lighting, and style

- Specify artistic style: Mention specific art styles like "photorealistic", "oil painting", or "anime style"

- Include composition details: Describe camera angle, perspective, and framing

- Mention lighting conditions: Specify time of day, light sources, and atmosphere

- Reference color palette: Describe the dominant colors or mood

- Avoid negative instructions: Focus on what you want rather than what you don't want

Example of a Well-Crafted Prompt

"Create a highly detailed digital painting of an ancient Japanese temple in autumn. The composition should feature the temple's ornate roof and entrance from a low angle. Golden maple leaves are falling gently in the foreground. The scene is bathed in late afternoon sunlight with long shadows. The color palette should focus on rich reds, golds, and deep browns with accents of muted greens."

Complete Image Generation Example

Here's a full example of generating and saving an image:

pythonimport openai

import requests

from PIL import Image

from io import BytesIO

import base64

# Initialize client (using laozhang.ai transit service)

client = openai.OpenAI(

api_key="your-laozhang-api-key",

base_url="https://api.laozhang.ai/v1"

)

def generate_and_save_image(prompt, output_path, style="photographic"):

"""Generate an image from a text prompt and save it to disk"""

try:

# Call the API

response = client.chat.completions.create(

model="gpt-4o", # Use the appropriate model name for your provider

messages=[

{

"role": "system",

"content": "You are an expert image creator. Generate high-quality images based on descriptions."

},

{

"role": "user",

"content": prompt

}

],

max_tokens=1000,

modalities=["text", "image"],

image_settings={

"width": 1024,

"height": 1024,

"quality": "high",

"style": style,

"number": 1

}

)

# Extract the image data

for item in response.choices[0].message.content:

if hasattr(item, 'image_url') and item.image_url:

if item.image_url.startswith('data:image/'):

# Handle base64 image

image_data = item.image_url.split(',')[1]

image = Image.open(BytesIO(base64.b64decode(image_data)))

image.save(output_path)

print(f"Image saved to {output_path}")

return True

else:

# Handle URL image

image_response = requests.get(item.image_url)

image = Image.open(BytesIO(image_response.content))

image.save(output_path)

print(f"Image saved to {output_path}")

return True

print("No image was generated in the response")

return False

except Exception as e:

print(f"Error generating image: {e}")

return False

# Generate an image

prompt = "Create a surreal digital artwork of a floating island with waterfalls cascading into the clouds below. The island has lush vegetation and ancient ruins. Dramatic lighting with sun rays breaking through clouds."

generate_and_save_image(prompt, "surreal_island.png", style="digital_art")

Advanced Applications and Use Cases

Let's explore some advanced applications of GPT-4o's image capabilities:

1. Interactive Visual QA System

Build a system that answers questions about uploaded images:

pythondef visual_qa_system():

# Load image from user

image_path = input("Enter the path to your image: ")

base64_image = encode_image(image_path)

print("Image loaded! You can now ask questions about this image.")

print("Type 'exit' to quit.")

while True:

question = input("\nYour question: ")

if question.lower() == 'exit':

break

response = client.chat.completions.create(

model="gpt-4o",

messages=[

{

"role": "user",

"content": [

{"type": "text", "text": question},

{

"type": "image_url",

"image_url": {

"url": f"data:image/jpeg;base64,{base64_image}"

}

}

]

}

]

)

print("\nAnswer:", response.choices[0].message.content)

2. Automated Image Captioning System

Generate descriptive captions for images automatically:

pythondef generate_image_caption(image_path):

"""Generate a descriptive caption for an image"""

base64_image = encode_image(image_path)

response = client.chat.completions.create(

model="gpt-4o",

messages=[

{

"role": "system",

"content": "You are a professional image captioner. Create concise, descriptive captions."

},

{

"role": "user",

"content": [

{"type": "text", "text": "Generate a descriptive caption for this image in 15-20 words."},

{

"type": "image_url",

"image_url": {

"url": f"data:image/jpeg;base64,{base64_image}"

}

}

]

}

]

)

return response.choices[0].message.content

3. Visual Content Generator

Create a system that generates images based on textual descriptions:

pythondef visual_content_generator():

print("Welcome to the Visual Content Generator!")

print("Describe the image you want to create.")

while True:

description = input("\nYour description (or 'exit' to quit): ")

if description.lower() == 'exit':

break

style = input("Choose a style (photographic, digital_art, illustration, 3d): ")

if not style in ["photographic", "digital_art", "illustration", "3d"]:

style = "photographic"

filename = input("Enter filename to save the image: ")

if not filename.endswith(('.png', '.jpg', '.jpeg')):

filename += '.png'

generate_and_save_image(description, filename, style)

4. Image-Based Document Analysis

Extract and analyze text from document images:

pythondef document_analyzer(image_path):

"""Extract and analyze text from a document image"""

base64_image = encode_image(image_path)

response = client.chat.completions.create(

model="gpt-4o",

messages=[

{

"role": "system",

"content": "You are a document analysis expert. Extract and summarize information from documents."

},

{

"role": "user",

"content": [

{"type": "text", "text": "Extract all text from this document image, then provide a summary of its key points."},

{

"type": "image_url",

"image_url": {

"url": f"data:image/jpeg;base64,{base64_image}"

}

}

]

}

]

)

return response.choices[0].message.content

Performance Optimization and Cost Management

Working with the GPT-4o image API efficiently requires balancing quality with cost-effectiveness.

Token Usage and Cost Considerations

Image operations consume tokens at different rates:

| Operation | Approximate Token Usage | Cost Impact |

|---|---|---|

| Image input analysis | 85 tokens per 512x512px area | High |

| Text prompt for generation | 1-2 tokens per word | Low |

| Image generation | Fixed cost per image | Medium |

| High-detail image analysis | 2-3x more than standard analysis | Very High |

| Multi-image comparison | Sum of individual images plus overhead | Very High |

Optimization Strategies

Implement these strategies to optimize performance and reduce costs:

- Resize input images: Scale down images to the minimum size needed for your use case

- Use detail parameter wisely: Only use

"detail": "high"when absolutely necessary - Batch related requests: Combine multiple questions about the same image into one request

- Cache results: Store analysis results for frequently used images

- Compress images: Use appropriate compression for input images to reduce token usage

- Monitor usage: Regularly check your API usage and costs to identify optimization opportunities

Implementing Image Preprocessing

pythonfrom PIL import Image

def preprocess_image_for_api(image_path, max_dimension=1024, quality=85):

"""Resize and optimize an image for API submission"""

img = Image.open(image_path)

# Calculate new dimensions while maintaining aspect ratio

width, height = img.size

if width > max_dimension or height > max_dimension:

if width > height:

new_width = max_dimension

new_height = int(height * (max_dimension / width))

else:

new_height = max_dimension

new_width = int(width * (max_dimension / height))

img = img.resize((new_width, new_height), Image.LANCZOS)

# Save to a temporary file with optimized quality

output_path = f"temp_optimized_{image_path.split('/')[-1]}"

img.save(output_path, quality=quality, optimize=True)

print(f"Image preprocessed: {width}x{height} → {new_width}x{new_height}")

return output_path

Troubleshooting Common Issues

When working with the GPT-4o image API, you might encounter various challenges. Here are solutions to common problems:

Connection and Authentication Problems

Issue: API returns authentication errors or connection timeouts. Solution:

- Verify your API key is valid and not expired

- Check your internet connection

- If using direct OpenAI access from restricted regions, consider switching to laozhang.ai transit service

- Ensure your account has sufficient quota for GPT-4o access

Image Processing Limitations

Issue: API rejects images or returns errors about image format. Solution:

- Ensure images are in supported formats (JPG, PNG, WebP)

- Check that image dimensions are within acceptable range (32px to 20,000px)

- Verify file size is under the 20MB limit

- For base64 encoding, ensure correct format with proper headers

Response Quality Issues

Issue: Generated images don't match expectations or analysis is superficial. Solution:

- Refine your prompts with more specific details

- Experiment with different styles and quality settings

- Use system messages to better guide the model's behavior

- For analysis, try setting

"detail": "high"parameter - Break complex requests into multiple simpler interactions

Error Handling Best Practices

Implement robust error handling in your applications:

pythondef api_request_with_retry(func, max_retries=3, backoff_factor=2):

"""Execute an API function with exponential backoff retry logic"""

retries = 0

last_exception = None

while retries < max_retries:

try:

return func()

except openai.APIError as e:

last_exception = e

wait_time = backoff_factor ** retries

print(f"API error: {e}. Retrying in {wait_time} seconds...")

time.sleep(wait_time)

retries += 1

# If we get here, all retries failed

print(f"Failed after {max_retries} retries. Last error: {last_exception}")

raise last_exception

Real-World Implementation Examples

Let's look at complete implementation examples in different languages.

Python Web Application (Flask)

pythonfrom flask import Flask, request, jsonify, render_template

import openai

import base64

from PIL import Image

import io

import os

app = Flask(__name__)

# Initialize OpenAI client

client = openai.OpenAI(

api_key=os.environ.get("LAOZHANG_API_KEY"),

base_url="https://api.laozhang.ai/v1"

)

@app.route('/')

def index():

return render_template('index.html')

@app.route('/analyze', methods=['POST'])

def analyze_image():

if 'image' not in request.files:

return jsonify({"error": "No image provided"}), 400

file = request.files['image']

question = request.form.get('question', 'What's in this image?')

# Read and encode image

img_data = file.read()

base64_image = base64.b64encode(img_data).decode('utf-8')

# Process with GPT-4o

try:

response = client.chat.completions.create(

model="gpt-4o-all",

messages=[

{

"role": "user",

"content": [

{"type": "text", "text": question},

{

"type": "image_url",

"image_url": {

"url": f"data:image/jpeg;base64,{base64_image}"

}

}

]

}

]

)

analysis = response.choices[0].message.content

return jsonify({"analysis": analysis})

except Exception as e:

return jsonify({"error": str(e)}), 500

@app.route('/generate', methods=['POST'])

def generate_image():

prompt = request.form.get('prompt')

if not prompt:

return jsonify({"error": "No prompt provided"}), 400

try:

response = client.chat.completions.create(

model="gpt-4o-all",

messages=[

{

"role": "system",

"content": "You are an expert image creator."

},

{

"role": "user",

"content": prompt

}

],

max_tokens=1000,

modalities=["text", "image"]

)

# Extract image data

for item in response.choices[0].message.content:

if hasattr(item, 'image_url') and item.image_url:

if item.image_url.startswith('data:image/'):

# It's a base64 image

return jsonify({"image_data": item.image_url})

else:

# It's a URL

return jsonify({"image_url": item.image_url})

return jsonify({"error": "No image was generated"}), 500

except Exception as e:

return jsonify({"error": str(e)}), 500

if __name__ == '__main__':

app.run(debug=True)

Node.js Implementation

javascriptconst express = require('express');

const multer = require('multer');

const OpenAI = require('openai');

const fs = require('fs');

const app = express();

const port = 3000;

// Set up multer for file handling

const upload = multer({ dest: 'uploads/' });

// Initialize OpenAI client

const openai = new OpenAI({

apiKey: process.env.LAOZHANG_API_KEY,

baseURL: 'https://api.laozhang.ai/v1'

});

app.use(express.json());

app.use(express.static('public'));

app.post('/analyze-image', upload.single('image'), async (req, res) => {

try {

if (!req.file) {

return res.status(400).json({ error: 'No image uploaded' });

}

const question = req.body.question || 'What can you see in this image?';

// Read the file and convert to base64

const imageBuffer = fs.readFileSync(req.file.path);

const base64Image = imageBuffer.toString('base64');

const response = await openai.chat.completions.create({

model: 'gpt-4o-all',

messages: [

{

role: 'user',

content: [

{ type: 'text', text: question },

{

type: 'image_url',

image_url: {

url: `data:image/jpeg;base64,${base64Image}`

}

}

]

}

]

});

// Clean up the uploaded file

fs.unlinkSync(req.file.path);

res.json({ analysis: response.choices[0].message.content });

} catch (error) {

console.error('Error:', error);

res.status(500).json({ error: error.message });

}

});

app.post('/generate-image', async (req, res) => {

try {

const { prompt } = req.body;

if (!prompt) {

return res.status(400).json({ error: 'No prompt provided' });

}

const response = await openai.chat.completions.create({

model: 'gpt-4o-all',

messages: [

{

role: 'system',

content: 'You are an expert image creator.'

},

{

role: 'user',

content: prompt

}

],

max_tokens: 1000,

modalities: ['text', 'image']

});

// Extract the image URL or data from response

let imageData = null;

for (const content of response.choices[0].message.content) {

if (content.image_url) {

imageData = content.image_url;

break;

}

}

if (imageData) {

res.json({ image: imageData });

} else {

res.status(500).json({ error: 'No image was generated' });

}

} catch (error) {

console.error('Error:', error);

res.status(500).json({ error: error.message });

}

});

app.listen(port, () => {

console.log(`Server running at http://localhost:${port}`);

});

Conclusion and Future Developments

The GPT-4o image API represents a significant milestone in multimodal AI development, offering unprecedented capabilities for both image analysis and generation within a single unified model. As we've explored throughout this guide, these capabilities open up exciting possibilities for applications across industries – from creative design to medical imaging, from e-commerce to education.

Key Takeaways

- Unified multimodal approach: GPT-4o's integration of vision and language enables more natural and powerful AI interactions

- Versatile image processing: The dual capability of understanding and generating images creates a complete visual AI solution

- Practical implementation: With the right setup and optimization techniques, GPT-4o's image features can be effectively deployed in production environments

- Continuous evolution: OpenAI continues to enhance these capabilities with regular updates and new features

What's Coming Next

Based on OpenAI's development roadmap, we can anticipate several exciting advancements in the near future:

- Enhanced image editing: More precise and powerful image manipulation capabilities

- Video generation: Extension of image generation to short video clips

- 3D object creation: Development of 3D modeling capabilities from text descriptions

- Improved integration options: More streamlined workflows for embedding these capabilities in applications

As GPT-4o continues to evolve, staying updated with the latest API documentation and best practices will be essential for developers looking to leverage its full potential.

Final Thoughts

The GPT-4o image API represents not just a technological advancement, but a fundamental shift in how we can interact with and create visual content. By combining the step-by-step implementation examples in this guide with your own creativity and domain expertise, you can build truly innovative applications that were previously impossible.

We encourage you to experiment with the techniques described here and push the boundaries of what's possible with multimodal AI. The future of visual AI is here – and it's more accessible than ever.

[FAQ] Frequently Asked Questions

Q1: What's the difference between GPT-4o's image capabilities and DALL-E 3?

A1: While DALL-E 3 is specifically designed for image generation, GPT-4o is a multimodal model that can both understand and generate images as part of a more comprehensive AI system. GPT-4o tends to excel at maintaining context across multiple interactions and handling images that contain text, while DALL-E 3 may produce more stylistically diverse images in some cases.

Q2: Can GPT-4o edit existing images?

A2: Yes, GPT-4o can perform some image editing tasks by understanding an input image and generating a modified version. However, these are technically new generations rather than direct edits. More advanced editing capabilities are expected in future updates.

Q3: How can I access GPT-4o from regions with API restrictions?

A3: Using an API transit service like laozhang.ai provides a reliable solution for accessing GPT-4o from regions with restrictions. These services maintain stable connections to OpenAI's API and provide compatible endpoints for seamless integration.

Q4: What are the current limitations of the GPT-4o image API?

A4: Current limitations include:

- Maximum input image size (typically 20MB)

- Generation resolution constraints (512-2048px)

- Inability to perfectly replicate specific styles or artists

- Limited control over fine details in generated images

- Processing time for complex image operations

Q5: How can I optimize token usage when working with images?

A5: To optimize token usage:

- Resize images to appropriate dimensions before submission

- Use the "detail": "low" parameter when full analysis isn't needed

- Batch related questions into a single request

- Use precise prompts to focus the model on relevant image aspects

- Consider using laozhang.ai's intelligent request optimization

🌟 Final tip: The GPT-4o image API is continuously improving—keep experimenting and updating your implementation to leverage the latest capabilities!

[Update Log] Continuous Improvement Record

plaintext┌─ Update History ───────────────────────────┐ │ 2025-05-25: Published comprehensive guide │ │ 2025-05-20: Tested advanced image settings │ │ 2025-05-15: Added Node.js implementation │ │ 2025-05-10: Updated for latest API changes │ └─────────────────────────────────────────────┘

🎉 Special note: This guide will be continuously updated with the latest features and best practices. Bookmark this page to stay informed about new developments!