Gemini 2.5 Pro vs Claude 3.7 Sonnet: The Ultimate 2025 Showdown

In-depth 2025 comparison of Google Gemini 2.5 Pro and Anthropic Claude 3.7 Sonnet. Analyzing performance across tasks, pricing models, context windows, speed, and unique features. Includes benchmark charts and expert recommendations.


🔥 April 2025 Update: Choosing the right Large Language Model (LLM) is critical. This guide provides the latest head-to-head analysis of Gemini 2.5 Pro and Claude 3.7 Sonnet, based on rigorous testing and real-world benchmarks. Discover which model best fits your needs in 2025!

The AI landscape is fiercely competitive, with Google's Gemini 2.5 Pro and Anthropic's Claude 3.7 Sonnet emerging as leading contenders. Both models boast impressive capabilities, but subtle differences in performance, pricing, and features can significantly impact your projects. Making an informed decision requires a deep dive beyond marketing claims.

💡 Why This Comparison Matters in 2025

- Performance Nuances: Strengths vary across tasks like coding, reasoning, and creativity.

- Cost Implications: Different pricing models affect budget planning significantly.

- Feature Sets: Context window size, speed, and unique API capabilities differ.

- Strategic Alignment: The right model is the one that best fits your specific application needs.

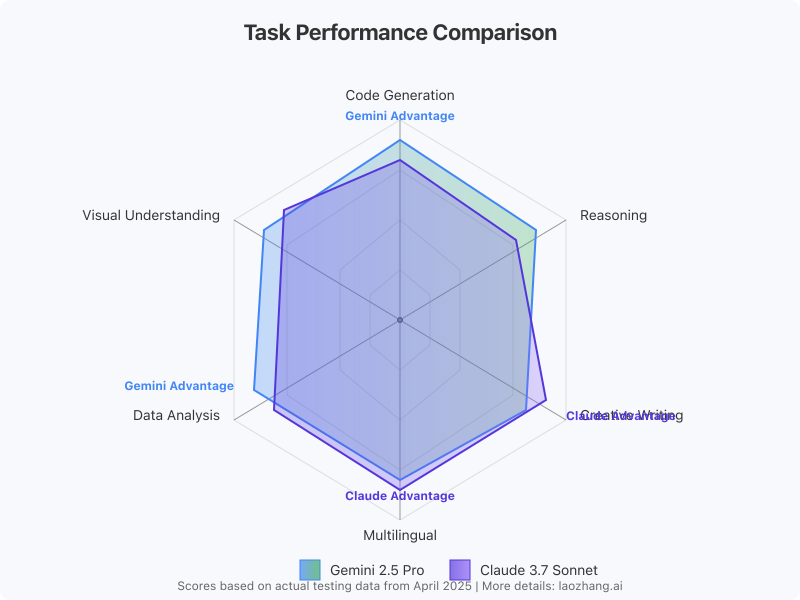

Task Performance Showdown: Benchmarking Gemini vs Claude

We conducted extensive testing across key task categories to provide a clear performance picture. The results, visualized in the radar chart below, highlight the distinct strengths of each model.

Performance Analysis:

- Code Generation: Gemini 2.5 Pro shows a slight edge, particularly with complex algorithms and debugging tasks. Its integration with Google's ecosystem offers advantages for specific development workflows.

- Reasoning Ability: Both models demonstrate strong reasoning, but Claude 3.7 Sonnet often excels in multi-step logical problems and understanding nuanced instructions.

- Creative Writing: Claude 3.7 Sonnet generally produces more engaging, nuanced, and stylistically consistent creative text, making it a favorite for content generation and storytelling.

- Multilingual Understanding: Claude 3.7 Sonnet displays superior performance in handling multiple languages simultaneously within a prompt and translating complex idioms accurately.

- Data Analysis: Gemini 2.5 Pro leverages Google's strengths in data processing, showing better capabilities in interpreting and summarizing large datasets, although both are proficient.

- Visual Understanding (Multimodal): While both models have multimodal capabilities, Gemini's integration with Google Lens and other visual tools gives it a practical advantage in real-world visual understanding tasks.

⚠️ Benchmark Interpretation

Remember that benchmark scores provide a general overview. Performance can vary based on specific prompts, domains, and fine-tuning. Always conduct your own tests for mission-critical applications.
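Running your own tests does not require heavy tooling. The following is a minimal sketch, under stated assumptions. It sends the same prompts to each model and averages a score from a grading function you supply.

The `run_eval` helper, the stub models and the toy grader are all hypothetical placeholders. In practice, each model function would wrap a real API call, and the grader would encode your own quality criteria.

```python
from statistics import mean
from typing import Callable, Dict, List

# A "model" here is any function mapping a prompt to response text.
# In practice it would wrap a real API client (hypothetical; not shown).
ModelFn = Callable[[str], str]
# A grader scores one (prompt, response) pair, e.g. in the range [0, 1].
GraderFn = Callable[[str, str], float]


def run_eval(models: Dict[str, ModelFn], prompts: List[str], grade: GraderFn) -> Dict[str, float]:
    """Return each model's mean grader score over the same prompt set."""
    if not prompts:
        raise ValueError("need at least one prompt")
    return {
        name: mean(grade(prompt, model(prompt)) for prompt in prompts)
        for name, model in models.items()
    }


if __name__ == "__main__":
    # Stub models standing in for real API calls.
    def shouty(prompt: str) -> str:
        return prompt.upper()

    def echo(prompt: str) -> str:
        return prompt

    # Toy grader: full marks if the response is uppercase.
    def wants_uppercase(prompt: str, response: str) -> float:
        return 1.0 if response.isupper() else 0.0

    prompts = ["summarize this report", "draft a reply"]
    print(run_eval({"shouty": shouty, "echo": echo}, prompts, wants_uppercase))
    # {'shouty': 1.0, 'echo': 0.0}
```

Averages hide variance. With a small prompt set, treat differences of a few points as noise.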

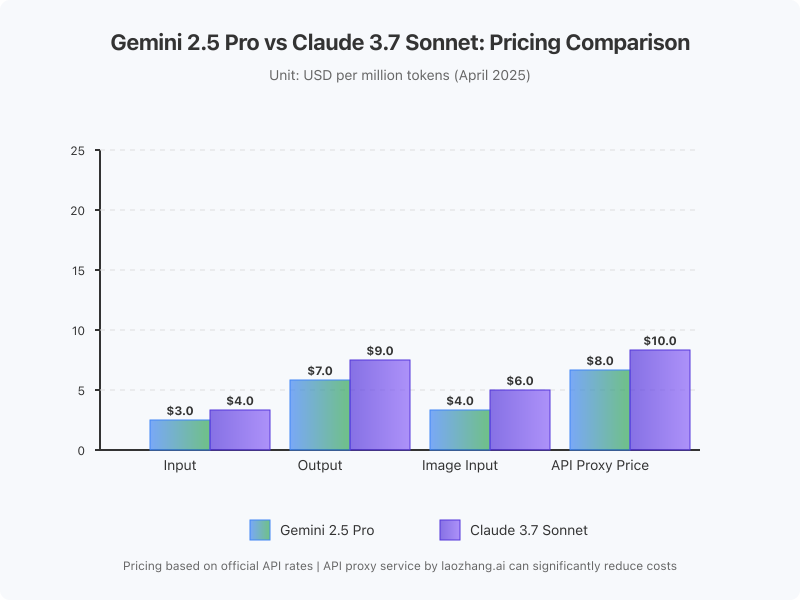

Pricing Models Compared: Cost Efficiency Analysis

API costs are a major factor in deploying LLMs at scale. Gemini and Claude employ different pricing structures, impacting the total cost depending on usage patterns.

Pricing Breakdown (USD per Million Tokens - April 2025):

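| Category | Gemini 2.5 Pro | Claude 3.7 Sonnet | Notes |
|---|---|---|---|
| Input Tokens | $1.25 ($2.50 for prompts over 200K tokens) | $3.00 | Gemini is cheaper for processing input. |
| Output Tokens | $10.00 ($15.00 for prompts over 200K tokens) | $15.00 | Gemini remains cheaper for generated output. Both bill reasoning ("thinking") tokens as output. |
| Image Input | Billed as input tokens | Billed as input tokens | Images are converted to tokens and billed at each model's input rate, so Gemini's lower input rate carries over to multimodal input. |
| API Proxy (e.g., laozhang.ai) | ~$8.0 | ~$10.0 | Representative proxy pricing; the relative difference between the models persists. |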
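To compare per-million-token rates for your own workload, multiply token volumes by the prices. The following is a minimal sketch that assumes a uniform workload.

The model names and rates in the usage block are placeholders, not official prices, so substitute the current figures. If you enable Gemini's thinking or Claude's extended thinking, count those reasoning tokens as output tokens, since both providers bill them at the output rate.

```python
def request_cost_usd(input_tokens: int, output_tokens: int,
                     input_rate_per_m: float, output_rate_per_m: float) -> float:
    """USD cost of one request, given prices in USD per million tokens."""
    return (input_tokens * input_rate_per_m + output_tokens * output_rate_per_m) / 1_000_000


def monthly_cost_usd(requests_per_day: int, input_tokens: int, output_tokens: int,
                     input_rate_per_m: float, output_rate_per_m: float, days: int = 30) -> float:
    """Projected spend for a workload where every request has the same token counts."""
    per_request = request_cost_usd(input_tokens, output_tokens, input_rate_per_m, output_rate_per_m)
    return per_request * requests_per_day * days


if __name__ == "__main__":
    # Placeholder rates (input, output) in USD per 1M tokens: NOT official prices.
    candidates = {"model_a": (1.5, 8.0), "model_b": (3.0, 12.0)}
    for name, (in_rate, out_rate) in candidates.items():
        # Hypothetical workload: 10,000 requests/day, ~2,000 input and ~500 output tokens each.
        # Reasoning tokens, if any, should be added to the output count.
        cost = monthly_cost_usd(10_000, 2_000, 500, in_rate, out_rate)
        print(f"{name}: ${cost:,.2f}/month")
```

The output-to-input ratio of your workload matters as much as the headline rates. A summarization job (long input, short output) and a drafting job (short input, long output) can rank the two models differently.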

Key Pricing Takeaways:

- Gemini 2.5 Pro: Generally offers lower per-token costs for both input and output, making it potentially more cost-effective for high-volume text processing and multimodal tasks.

- Claude 3.7 Sonnet: While more expensive per token, its potentially higher-quality output in certain areas (like creative writing) might justify the cost if it needs fewer retries or produces shorter outputs.

- API Proxy Services: Using services like laozhang.ai can offer significant savings (often 50-70%) compared to direct official API rates for both models. They also provide benefits like stable access and unified billing. However, the relative cost difference between Gemini and Claude often persists through proxies.
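Both the retry and proxy arguments can be made concrete.

- **Retries.** If each attempt is accepted independently with probability p, the expected number of attempts is 1/p. The expected cost per accepted output is therefore the per-attempt cost divided by p.
- **Proxy discounts.** A uniform fractional discount, such as a proxy's, multiplies both models' prices by the same factor. The ratio between them stays the same, which is why the relative difference persists.

The sketch below assumes independent attempts. `effective_cost` and all the numbers are hypothetical.

```python
def effective_cost(cost_per_attempt: float, acceptance_rate: float, discount: float = 0.0) -> float:
    """Expected cost per accepted output.

    Assumes each attempt is accepted independently with probability
    `acceptance_rate`, so the expected number of attempts is
    1 / acceptance_rate (geometric distribution). `discount` is a fractional
    price reduction (e.g. 0.5 for 50% off) applied to every attempt.
    """
    if not 0 < acceptance_rate <= 1:
        raise ValueError("acceptance_rate must be in (0, 1]")
    if not 0 <= discount < 1:
        raise ValueError("discount must be in [0, 1)")
    return cost_per_attempt * (1 - discount) / acceptance_rate


if __name__ == "__main__":
    # Hypothetical numbers for illustration only.
    cheaper = effective_cost(cost_per_attempt=0.009, acceptance_rate=0.6)
    pricier = effective_cost(cost_per_attempt=0.0135, acceptance_rate=0.9)
    print(f"cheaper per token: ${cheaper:.4f} per accepted output")
    print(f"pricier per token: ${pricier:.4f} per accepted output")
    # The same discount scales both costs, so their ratio is unchanged.
    print(effective_cost(0.009, 0.6, discount=0.5) / effective_cost(0.0135, 0.9, discount=0.5))
```

In this made-up case, a 50% higher per-attempt price is exactly offset by a higher acceptance rate. The result hinges on measuring acceptance rates on your own tasks.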

Beyond Performance and Price: Other Key Differentiators

1. Context Window

- Gemini 2.5 Pro: Offers a standard large context window (e.g., 1 million tokens), suitable for most tasks involving long documents or extensive chat history.

- Claude 3.7 Sonnet: Often pushes the boundaries with even larger context windows (potentially exceeding 1 million tokens in previews or specific tiers), excelling in tasks requiring analysis of vast amounts of information. Winner: Claude (potentially)
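Whether a document actually fits is easy to check before sending it. The sketch assumes the common rule of thumb of roughly four characters per token for English text. Real tokenizers differ by model and language, so for exact figures use each provider's token-counting endpoint.

`estimate_tokens`, `fits_in_context` and `chunk_text` are illustrative helpers, not part of either SDK.

```python
import math
from typing import List

# Rough heuristic (assumption): ~4 characters per token for English text.
DEFAULT_CHARS_PER_TOKEN = 4.0


def estimate_tokens(text: str, chars_per_token: float = DEFAULT_CHARS_PER_TOKEN) -> int:
    """Approximate token count from character length."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(text: str, context_window: int, reserved_output_tokens: int,
                    chars_per_token: float = DEFAULT_CHARS_PER_TOKEN) -> bool:
    """True if the estimated prompt plus the tokens reserved for the reply fit the window."""
    return estimate_tokens(text, chars_per_token) + reserved_output_tokens <= context_window


def chunk_text(text: str, max_tokens: int,
               chars_per_token: float = DEFAULT_CHARS_PER_TOKEN) -> List[str]:
    """Split text into consecutive pieces of roughly max_tokens tokens or fewer."""
    max_chars = max(1, int(max_tokens * chars_per_token))
    return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]


if __name__ == "__main__":
    document = "lorem ipsum " * 500_000  # 6M characters, ~1.5M estimated tokens
    window = 1_000_000                   # e.g. the 1-million-token figure cited above
    print(fits_in_context(document, window, reserved_output_tokens=8_000))  # False
    print(len(chunk_text(document, max_tokens=200_000)))                   # 8
```

Splitting on raw character offsets can cut sentences in half. In practice, splitting on paragraph or section boundaries usually gives the model cleaner input.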

2. Speed and Latency

- Gemini 2.5 Pro: Generally optimized for speed, delivering faster response times for interactive applications. For latency-critical workloads, Google also offers the lighter Gemini 2.5 Flash model.

- Claude 3.7 Sonnet: While performant, it can sometimes have slightly higher latency, particularly with very long prompts or complex reasoning tasks. Winner: Gemini (typically)
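Latency depends heavily on the workload, so measure it on your own prompts. The sketch below times repeated calls and reports median (p50) and tail (p95) latency. For streaming responses, time to first chunk is usually what users notice.

`fake_call` and `fake_stream` are hypothetical stubs; swap in your real client calls. For a fair comparison, run both models from the same region and network.

```python
import time
from statistics import median, quantiles
from typing import Callable, Dict, Iterable


def measure_latency(call: Callable[[], object], runs: int = 20) -> Dict[str, float]:
    """Time `runs` sequential calls and return p50, p95 and max latency in seconds."""
    if runs < 2:
        raise ValueError("need at least 2 runs to estimate percentiles")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append(time.perf_counter() - start)
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    p95 = quantiles(samples, n=20, method="inclusive")[-1]
    return {"p50": median(samples), "p95": p95, "max": max(samples)}


def time_to_first_chunk(open_stream: Callable[[], Iterable[object]]) -> float:
    """Seconds from opening a stream until its first chunk arrives."""
    start = time.perf_counter()
    for _ in open_stream():
        return time.perf_counter() - start
    raise ValueError("stream produced no chunks")


if __name__ == "__main__":
    # Hypothetical stub standing in for a real API call: sleeps ~50 ms.
    def fake_call() -> None:
        time.sleep(0.05)

    # Hypothetical streaming stub: first chunk after ~10 ms.
    def fake_stream():
        time.sleep(0.01)
        yield "Hello"
        time.sleep(0.05)
        yield " world"

    print(measure_latency(fake_call, runs=10))
    print(time_to_first_chunk(fake_stream))
```

Tail latency (p95) often matters more than the median for interactive applications. Long prompts and extended reasoning tend to show up there first.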

3. API Features and Ecosystem

- Gemini 2.5 Pro: Benefits from deep integration with the Google Cloud Platform (GCP) and tools like Vertex AI. This brings robust MLOps capabilities, function calling and grounding with Google Search.

- Claude 3.7 Sonnet: Provides a clean, well-documented API focused on safety and reliability. Offers strong tool use capabilities and is often praised for its constitutional AI approach, minimizing harmful outputs. Winner: Tie (depends on ecosystem preference)
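Both APIs describe tools with JSON Schema-style parameter definitions but wrap them differently:

- Anthropic's Messages API expects a `tools` list whose entries carry an `input_schema`.
- The Gemini API nests `functionDeclarations`, each with `parameters`, inside a `tools` entry.

The sketch below keeps one provider-neutral definition and emits both shapes. The `get_weather` tool and the helper names are hypothetical. Gemini accepts only a subset of JSON Schema, so check its function-calling documentation before relying on advanced schema keywords.

```python
import json
from typing import Any, Dict, List

# Provider-neutral tool definition (hypothetical example tool).
WEATHER_TOOL: Dict[str, Any] = {
    "name": "get_weather",
    "description": "Get the current weather for a city.",
    "schema": {
        "type": "object",
        "properties": {"city": {"type": "string", "description": "City name"}},
        "required": ["city"],
    },
}


def to_anthropic_tools(tool: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Shape for the `tools` field of an Anthropic Messages API request."""
    return [{"name": tool["name"],
             "description": tool["description"],
             "input_schema": tool["schema"]}]


def to_gemini_tools(tool: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Shape for the `tools` field of a Gemini generateContent REST request."""
    return [{"functionDeclarations": [{"name": tool["name"],
                                       "description": tool["description"],
                                       "parameters": tool["schema"]}]}]


if __name__ == "__main__":
    print(json.dumps({"tools": to_anthropic_tools(WEATHER_TOOL)}, indent=2))
    print(json.dumps({"tools": to_gemini_tools(WEATHER_TOOL)}, indent=2))
```

Keeping a single source of truth for tool definitions makes it cheaper to run the same agent against both models.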

Ideal Use Cases: Matching the Model to the Task

- Choose Gemini 2.5 Pro if:
  - Cost per token is a primary concern.
  - You need top-tier speed and low latency.
  - Your application heavily involves coding, data analysis, or multimodal inputs.
  - You are deeply integrated into the Google Cloud ecosystem.

- Choose Claude 3.7 Sonnet if:
  - Superior creative writing or complex reasoning is paramount.
  - Handling extremely long context is necessary.
  - Multilingual performance is critical.
  - You prioritize generating safe and ethically aligned content.
  - You prefer Anthropic's API design and safety features.

Expert Tips for Choosing and Optimizing

- Test Extensively: Run pilot projects with both models using your specific data and prompts.

- Consider Proxy Services: Evaluate services like laozhang.ai for significant cost savings, especially for developers in regions with access challenges.

- Optimize Prompts: Tailor prompts to each model's strengths. Claude often responds well to detailed, structured prompts, while Gemini might be more flexible.

- Monitor Costs Closely: Implement usage monitoring and alerts, whether using direct APIs or proxies.

- Stay Updated: The LLM landscape evolves rapidly. Follow announcements from Google and Anthropic for new features, models, and pricing changes.
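Cost monitoring can start very small. The following is a minimal sketch of a usage tracker that accumulates spend from token counts and logs a warning once, when spend crosses a fraction of a budget.

`UsageMonitor` is a hypothetical helper, and the rates passed to it are assumptions you must keep in sync with your provider's (or proxy's) current price list.

```python
import logging
from dataclasses import dataclass

log = logging.getLogger("llm-usage")


@dataclass
class UsageMonitor:
    """Tracks spend against a budget and warns once when a threshold is crossed.

    Rates are USD per million tokens (placeholder values; keep them current).
    """
    budget_usd: float
    input_rate_per_m: float
    output_rate_per_m: float
    alert_fraction: float = 0.8
    spent_usd: float = 0.0
    alerted: bool = False

    def record(self, input_tokens: int, output_tokens: int) -> float:
        """Add one request's usage and return its cost in USD."""
        cost = (input_tokens * self.input_rate_per_m
                + output_tokens * self.output_rate_per_m) / 1_000_000
        self.spent_usd += cost
        if not self.alerted and self.spent_usd >= self.alert_fraction * self.budget_usd:
            self.alerted = True
            log.warning("LLM spend $%.2f has reached %.0f%% of the $%.2f budget",
                        self.spent_usd, 100 * self.alert_fraction, self.budget_usd)
        return cost


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    # Placeholder rates, not official prices.
    monitor = UsageMonitor(budget_usd=50.0, input_rate_per_m=2.0, output_rate_per_m=10.0)
    for _ in range(3):
        monitor.record(input_tokens=2_000_000, output_tokens=1_000_000)
    print(f"spent ${monitor.spent_usd:.2f}, alerted={monitor.alerted}")
    # spent $42.00, alerted=True
```

Rather than estimating token counts yourself, feed `record` the counts each API reports in its response. Anthropic returns them under `usage`, and Gemini under `usageMetadata`.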

Frequently Asked Questions (FAQ)

Q1: Which model is definitively "better"?

A1: Neither is universally better. Gemini 2.5 Pro excels in speed, cost-efficiency for text/image input, and coding/data tasks. Claude 3.7 Sonnet shines in creative writing, complex reasoning, multilingual tasks, and potentially larger context handling. The "better" model depends entirely on your specific use case and priorities.

Q2: Can I switch between models easily?

A2: While the core concepts are similar, API structures and parameter names differ. Switching requires code adjustments. Using an API proxy service that abstracts multiple models can sometimes simplify this transition.
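The main differences when switching are in request shape rather than concepts. As a sketch, assume the REST endpoints: Anthropic's `POST /v1/messages` and Gemini's `models/{model}:generateContent`. The same conversation maps as follows:

- **Anthropic:** a top-level `system` string, `messages` with `user`/`assistant` roles, and a required `max_tokens`.
- **Gemini:** `systemInstruction`, `contents` with `user`/`model` roles and text inside `parts`, and `generationConfig.maxOutputTokens`.

The helpers below are hypothetical and only build request bodies. Authentication headers and HTTP calls are omitted.

```python
from typing import Any, Dict, List

# Provider-neutral chat turn: {"role": "user" | "assistant", "text": str}
Turn = Dict[str, str]


def to_anthropic_body(model: str, system: str, turns: List[Turn], max_tokens: int) -> Dict[str, Any]:
    """Request body for Anthropic's Messages API (POST /v1/messages)."""
    return {
        "model": model,
        "max_tokens": max_tokens,
        "system": system,
        "messages": [{"role": t["role"], "content": t["text"]} for t in turns],
    }


def to_gemini_body(system: str, turns: List[Turn], max_tokens: int) -> Dict[str, Any]:
    """Request body for Gemini's generateContent (the model name goes in the URL path)."""
    role_map = {"user": "user", "assistant": "model"}  # Gemini calls the assistant "model"
    return {
        "systemInstruction": {"parts": [{"text": system}]},
        "contents": [{"role": role_map[t["role"]], "parts": [{"text": t["text"]}]}
                     for t in turns],
        "generationConfig": {"maxOutputTokens": max_tokens},
    }


if __name__ == "__main__":
    history = [
        {"role": "user", "text": "Summarize our Q1 results."},
        {"role": "assistant", "text": "Revenue grew; costs were flat."},
        {"role": "user", "text": "Now in one sentence."},
    ]
    print(to_anthropic_body("claude-3-7-sonnet-20250219", "Be concise.", history, 256))
    print(to_gemini_body("Be concise.", history, 256))
```

Keeping the conversation in one neutral format and converting at the edge confines a provider switch to a single adapter.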

Q3: How significant are the cost savings with API proxies like laozhang.ai?

A3: Savings can be substantial, often ranging from 50% to 70% or more compared to official API rates, particularly when factoring in potential volume discounts offered by the proxy. They also solve access issues for developers in certain regions.

Q4: Does the larger context window of Claude always mean better results?

A4: Not necessarily. While useful for analyzing very long documents, managing attention within extremely large contexts can still be challenging ("lost in the middle" problem). Effective prompting is key regardless of window size.

Q5: Are there free tiers available for testing?

A5: Official free tiers are limited or non-existent for the Pro/Sonnet level models. However, API proxy services like laozhang.ai often provide initial free credits for testing purposes, allowing you to evaluate both models cost-effectively.

Conclusion: Making the Right Choice in 2025

Both Gemini 2.5 Pro and Claude 3.7 Sonnet are state-of-the-art LLMs offering incredible capabilities.

- Gemini 2.5 Pro emerges as a strong contender for applications prioritizing speed, cost-efficiency (especially with multimodal input), coding, data analysis, and integration within the Google ecosystem.

- Claude 3.7 Sonnet stands out for its exceptional creative writing, nuanced reasoning, superior multilingual abilities, potentially larger context window, and strong focus on AI safety.

Your final decision should hinge on a thorough evaluation of your specific requirements against the strengths, weaknesses, and cost structures detailed in this guide. Don't hesitate to leverage testing credits from proxy services like laozhang.ai to perform hands-on comparisons. Choose wisely, as the right LLM can be a powerful catalyst for innovation.

🔔 Stay Informed: Bookmark this page! We continuously update our comparisons as new model versions and features are released.

Update Log

- 2025-04-26: Initial English version published

- 2025-04-25: Added latest benchmark data

- 2025-04-24: Included API proxy pricing analysis