ChatGPT Sora Video Generator API: Complete Integration Guide 2025

Learn how to integrate and use the OpenAI Sora video generation API with ChatGPT. This guide explains pricing, features, and limitations, and includes code examples that use the laozhang.ai transit API for immediate access.


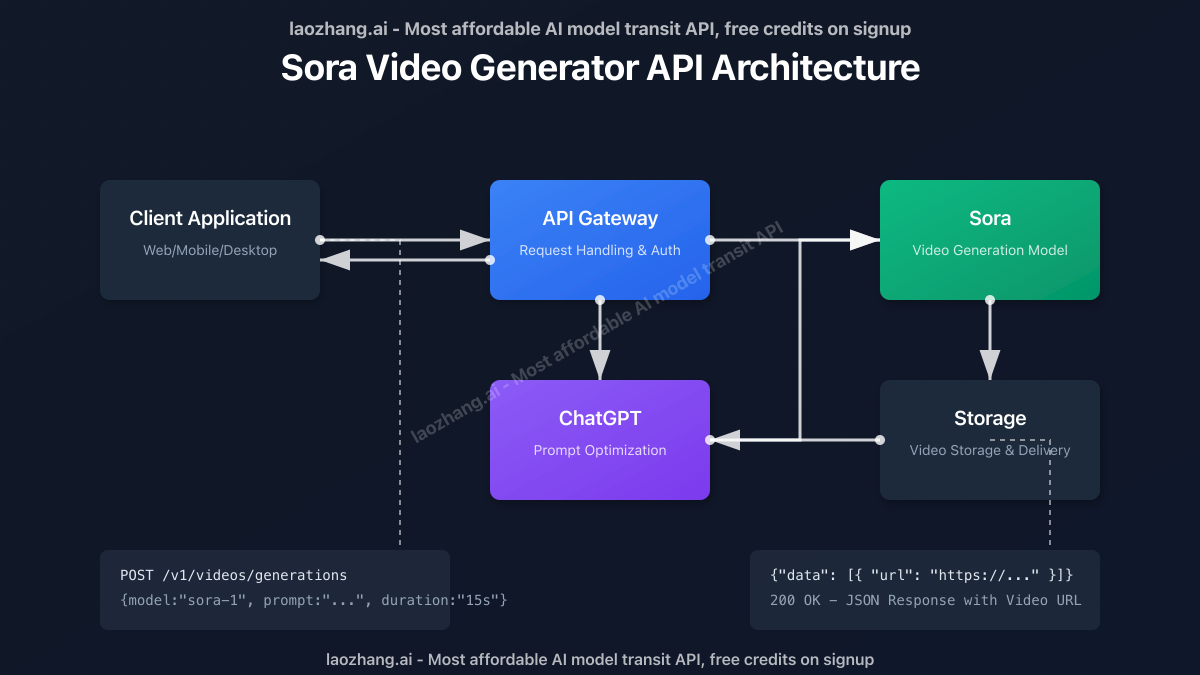

The integration of OpenAI's Sora video generation model with ChatGPT lets developers create high-quality videos directly through API calls. This guide explains how to implement the Sora video generator API, covering technical requirements, pricing considerations, and practical code examples.

🔥 2025 Latest Update: As of May 2025, Sora API access is gradually being rolled out to developers. While official access may still be limited, this guide includes alternative ways to get started today, including the laozhang.ai transit API, which offers immediate access and a lower per-minute rate.

What is the ChatGPT Sora Video Generator API?

Sora represents OpenAI's breakthrough text-to-video generation model, capable of creating realistic and imaginative scenes from text descriptions. The model can generate videos up to one minute long while maintaining remarkable visual quality and adherence to prompts.

The integration with ChatGPT gives developers several key advantages:

- Direct API access to video generation capabilities through familiar endpoints

- Multimodal interactions that combine text, image inputs, and video outputs

- Advanced prompt engineering capabilities to fine-tune video results

- Integration with existing ChatGPT-powered applications

Current State of Sora API Availability (May 2025)

Understanding the current availability status is crucial before planning your integration:

1. Official OpenAI API Access

As of May 2025, OpenAI has implemented a phased rollout approach for the Sora API:

| Access Level | Availability | Requirements |
|---|---|---|
| Public Beta | Limited | Approved developer account, usage limits apply |
| ChatGPT Plus | Yes, with restrictions | New accounts may face temporary restrictions |
| Enterprise | Yes | Custom pricing, higher rate limits |

According to recent updates on the OpenAI Developer Forum, new ChatGPT Plus accounts may see the message "Video generation is temporarily disabled for new accounts", a measure implemented to manage system load as the service scales.

2. Third-Party Transit API Access

For developers seeking immediate access without restrictions, several third-party transit API services provide Sora functionality:

- laozhang.ai - Offers immediate access with competitive pricing

- Other providers - Varying levels of reliability and higher costs

💡 Developer Tip: While waiting for official API access, third-party transit APIs like laozhang.ai provide a practical way to start development and testing. Their API endpoints are typically designed to be fully compatible with the official OpenAI API format, making future migration seamless.

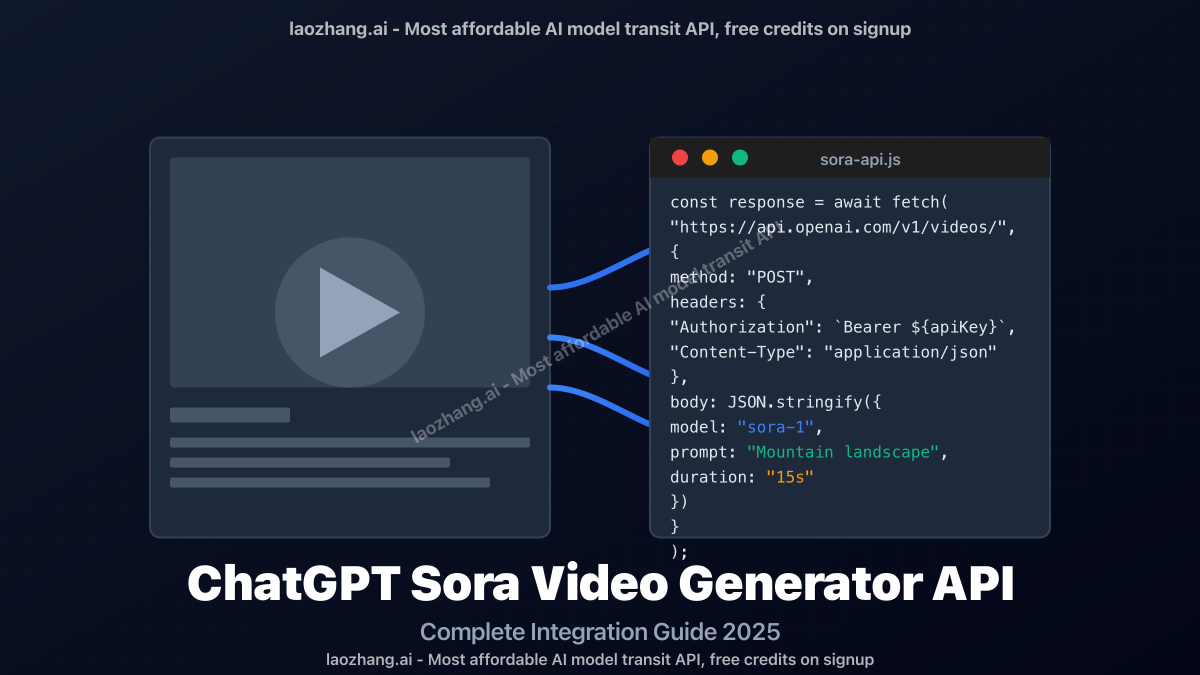

Technical Implementation: Using the Sora API

Let's dive into the practical aspects of implementing Sora video generation in your applications.

1. Authentication and API Keys

To authenticate with the API, you'll need to obtain valid API credentials:

- Official OpenAI API: Standard OpenAI API keys with Sora scope

- laozhang.ai API: Register at https://api.laozhang.ai/register/?aff_code=JnIT to receive immediate API keys with free credits

2. Basic API Request Structure

The Sora API follows RESTful principles with a JSON request/response format:

    // Basic structure for Sora video generation request
    const response = await fetch("https://api.openai.com/v1/videos/generations", {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        "Authorization": "Bearer YOUR_API_KEY"
      },
      body: JSON.stringify({
        model: "sora-1",
        prompt: "A serene mountain landscape with a flowing river and eagles soaring overhead",
        duration: "15s",
        dimensions: "1920x1080"
      })
    });

    const data = await response.json();
    const videoUrl = data.data[0].url;

When using the laozhang.ai transit API, the structure remains identical; only the base URL changes:

    // Same structure, different base URL
    const response = await fetch("https://api.laozhang.ai/v1/videos/generations", {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        "Authorization": "Bearer YOUR_LAOZHANG_API_KEY"
      },
      body: JSON.stringify({
        model: "sora-1",
        prompt: "A serene mountain landscape with a flowing river and eagles soaring overhead",
        duration: "15s",
        dimensions: "1920x1080"
      })
    });
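Since only the base URL differs between the two requests above, the choice of provider can be reduced to a configuration value. The following Python sketch illustrates that idea under stated assumptions: the provider keys (`openai`, `laozhang`), the `build_request` helper, and the `SORA_API_KEY` environment variable are hypothetical names introduced here, and the endpoint path and field names are simply copied from the examples in this guide. It only assembles the request and sends nothing.

```python
import os

# Base URLs as they appear in the examples above; the provider keys
# ("openai", "laozhang") are hypothetical names chosen for this sketch.
BASE_URLS = {
    "openai": "https://api.openai.com/v1",
    "laozhang": "https://api.laozhang.ai/v1",
}


def build_request(prompt, provider="laozhang", api_key=None,
                  duration="15s", dimensions="1920x1080"):
    """Return (url, headers, payload) for a video generation request."""
    if provider not in BASE_URLS:
        raise ValueError(f"Unknown provider: {provider!r}")
    # SORA_API_KEY is an assumed environment variable name.
    key = api_key or os.environ.get("SORA_API_KEY", "")
    url = f"{BASE_URLS[provider]}/videos/generations"
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {key}",
    }
    payload = {
        "model": "sora-1",
        "prompt": prompt,
        "duration": duration,
        "dimensions": dimensions,
    }
    return url, headers, payload


if __name__ == "__main__":
    url, headers, payload = build_request(
        "A serene mountain landscape", provider="openai", api_key="YOUR_API_KEY"
    )
    print(url)
```

Keeping the base URL in one table means a later migration between providers touches a single argument rather than every call site.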

3. Core API Parameters

The Sora API accepts several parameters to customize video generation:

| Parameter | Type | Description |
|---|---|---|
| model | String | Model identifier (e.g., "sora-1") |
| prompt | String | Text description of the desired video |
| duration | String | Video length ("5s" to "60s") |
| dimensions | String | Resolution format ("1080x1920", "1920x1080", etc.) |
| style | String | Optional visual style preference |
| seed | Integer | Optional seed for reproducible results |
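Validating these parameters on the client before sending a request can save a failed (and possibly billed) call. The sketch below is one way to do that in Python. It assumes the formats listed in the table (a duration string from "5s" to "60s", a "WIDTHxHEIGHT" dimensions string); `parse_duration` and `build_payload` are hypothetical helper names, and the actual server-side validation rules may differ.

```python
import re


def parse_duration(duration):
    """Convert a string such as "15s" to seconds, enforcing the 5-60 s range."""
    match = re.fullmatch(r"(\d+)s", duration)
    if not match:
        raise ValueError(f"Duration must look like '15s', got {duration!r}")
    seconds = int(match.group(1))
    if not 5 <= seconds <= 60:
        raise ValueError(f"Duration must be between 5s and 60s, got {duration!r}")
    return seconds


def build_payload(prompt, duration="15s", dimensions="1920x1080",
                  style=None, seed=None, model="sora-1"):
    """Build a request body from the parameters in the table above."""
    if not prompt or not prompt.strip():
        raise ValueError("Prompt must not be empty")
    parse_duration(duration)  # raises ValueError on a bad value
    if not re.fullmatch(r"\d+x\d+", dimensions):
        raise ValueError(f"Dimensions must look like '1920x1080', got {dimensions!r}")
    payload = {
        "model": model,
        "prompt": prompt,
        "duration": duration,
        "dimensions": dimensions,
    }
    # Optional fields are only included when the caller sets them.
    if style is not None:
        payload["style"] = style
    if seed is not None:
        payload["seed"] = int(seed)
    return payload


if __name__ == "__main__":
    print(build_payload("A drone flying over a tropical beach", seed=42))
```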

4. Advanced API Usage

For more sophisticated implementations, the API supports several advanced features:

Image-to-Video Generation

    // Generate video from image and text prompt
    const response = await fetch("https://api.laozhang.ai/v1/videos/generations", {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        "Authorization": "Bearer YOUR_API_KEY"
      },
      body: JSON.stringify({
        model: "sora-1",
        prompt: "Extend this image into a video of a busy city street",
        image: {
          url: "https://example.com/image.jpg"
        },
        duration: "20s",
        dimensions: "1920x1080"
      })
    });

Video Editing and Extension

    // Extend or modify an existing video
    const response = await fetch("https://api.laozhang.ai/v1/videos/edits", {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        "Authorization": "Bearer YOUR_API_KEY"
      },
      body: JSON.stringify({
        model: "sora-1",
        video: {
          url: "https://example.com/source_video.mp4"
        },
        prompt: "Continue this video, showing what happens after the car drives away",
        duration: "30s"
      })
    });

⚠️ Known Limitation: According to recent user reports on the OpenAI developer forum, Sora currently struggles with maintaining context between generations. This means that when attempting to extend or remix videos, quality may not be consistent. This limitation is expected to be addressed in future updates.

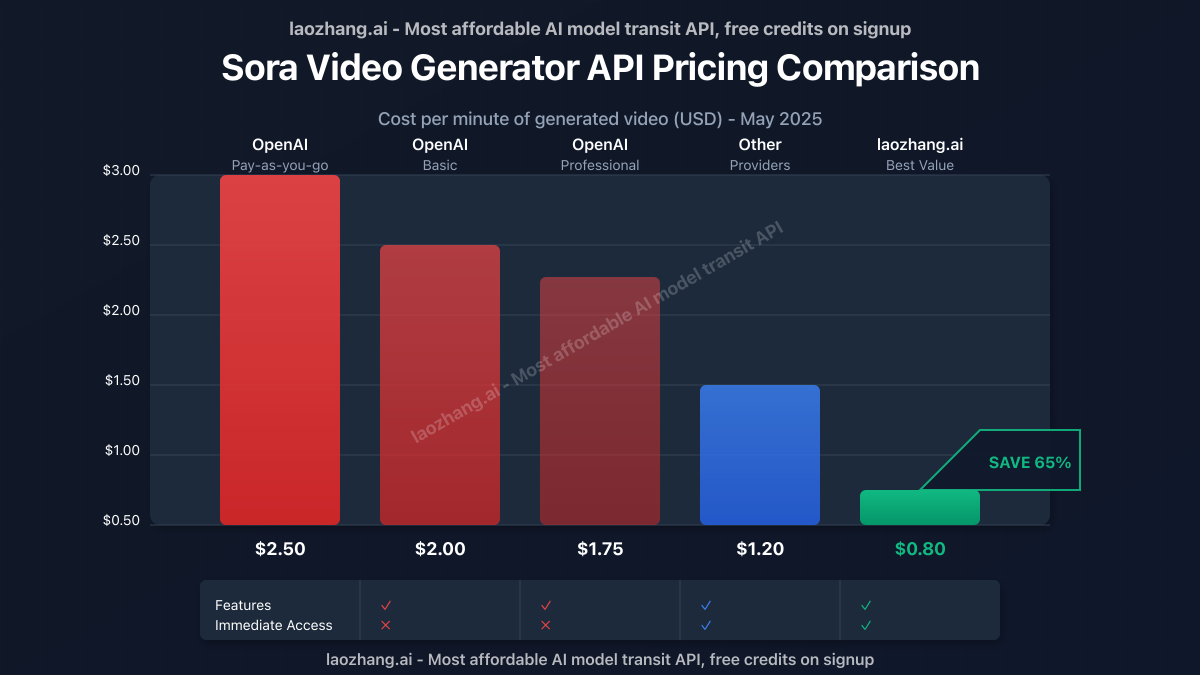

Pricing and Cost Optimization

Understanding the cost structure is essential for budgeting and optimization:

1. Official OpenAI Pricing (May 2025)

OpenAI offers multiple pricing tiers for Sora:

| Plan | Cost | Included Usage | Overage Rate |
|---|---|---|---|
| Pay-as-you-go | No base fee | None | $2.50 per video minute |
| Basic | $200/month | 100 video minutes | $2.00 per additional minute |
| Professional | $500/month | 300 video minutes | $1.75 per additional minute |
| Enterprise | Custom | Custom | Custom |
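To see which tier fits a given workload, the table can be turned into a small calculation: monthly cost is the base fee plus the overage rate times any minutes beyond the included allowance. The Python sketch below uses only the figures from the table above; the `PLANS` structure and the function names are illustrative, and the Enterprise tier is omitted because its pricing is custom.

```python
# Figures copied from the pricing table above (USD).
PLANS = {
    "Pay-as-you-go": {"base": 0.0, "included": 0, "overage": 2.50},
    "Basic": {"base": 200.0, "included": 100, "overage": 2.00},
    "Professional": {"base": 500.0, "included": 300, "overage": 1.75},
}


def monthly_cost(plan, minutes):
    """Base fee plus overage for minutes beyond the included allowance."""
    p = PLANS[plan]
    extra_minutes = max(0, minutes - p["included"])
    return p["base"] + extra_minutes * p["overage"]


def cheapest_plan(minutes):
    """Name of the plan with the lowest monthly cost for this usage."""
    return min(PLANS, key=lambda name: monthly_cost(name, minutes))


if __name__ == "__main__":
    for minutes in (50, 150, 350):
        costs = {name: monthly_cost(name, minutes) for name in PLANS}
        print(minutes, costs, "->", cheapest_plan(minutes))
```

For 150 video minutes, for example, pay-as-you-go comes to 150 × $2.50 = $375, Basic to $200 + 50 × $2.00 = $300, and Professional to $500, so Basic is cheapest. With these rates, pay-as-you-go and Basic break even at 80 minutes per month ($200 ÷ $2.50).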

2. Third-Party API Pricing

Third-party transit APIs typically offer more competitive pricing:

| Provider | Base Price | Per Minute Rate | Minimum Purchase |
|---|---|---|---|
| laozhang.ai | $0 | $0.80 per video minute | None (Free credits for new users) |
| Other providers | Varies | $1.20-$2.00 per minute | Often requires minimum purchase |

3. Cost Optimization Strategies

To maximize your budget efficiency:

- Generate shorter videos and stitch them together with traditional video editing tools

- Optimize prompts to get desired results in fewer attempts

- Use lower resolutions for testing and initial prototypes

- Implement caching for frequently requested videos

- Batch similar requests to reduce overall API calls
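The caching strategy from the list above can be sketched as a lookup keyed by a hash of the request parameters, so that an identical request is only paid for once. In this Python sketch, `VideoCache` is a hypothetical class, and the `generate` callable stands in for whatever function actually calls the API. The cache is in-memory only; if a provider's video URLs expire, caching the downloaded file instead would be the safer variant.

```python
import hashlib
import json


class VideoCache:
    """In-memory cache mapping request parameters to generated video URLs."""

    def __init__(self, generate):
        # `generate` is any callable that takes a payload dict and returns a URL.
        self._generate = generate
        self._urls = {}

    @staticmethod
    def key(payload):
        """Stable hash of the payload, independent of dict key order."""
        canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def get(self, payload):
        """Return the cached URL, generating the video only on a cache miss."""
        k = self.key(payload)
        if k not in self._urls:
            self._urls[k] = self._generate(payload)
        return self._urls[k]


if __name__ == "__main__":
    calls = []

    def fake_generate(payload):
        # Stand-in for a real API call, used only to demonstrate the cache.
        calls.append(payload)
        return f"https://example.com/video-{len(calls)}.mp4"

    cache = VideoCache(fake_generate)
    request = {"model": "sora-1", "prompt": "A beach at sunset", "duration": "5s"}
    print(cache.get(request))
    print(cache.get(dict(request)))  # same parameters: served from the cache
    print(len(calls))
```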

Integration Examples

Let's look at practical integration examples for different programming environments:

1. Node.js Integration

    const axios = require('axios');

    async function generateVideo(prompt, duration = "15s") {
      try {
        const response = await axios.post('https://api.laozhang.ai/v1/videos/generations', {
          model: "sora-1",
          prompt,
          duration,
          dimensions: "1920x1080"
        }, {
          headers: {
            'Content-Type': 'application/json',
            'Authorization': `Bearer ${process.env.LAOZHANG_API_KEY}`
          }
        });

        return response.data.data[0].url;
      } catch (error) {
        console.error("Error generating video:", error.response?.data || error.message);
        throw error;
      }
    }

    // Usage example
    generateVideo("A drone flying over a tropical beach at sunset")
      .then(videoUrl => console.log("Video URL:", videoUrl))
      .catch(err => console.error("Failed:", err));

2. Python Integration

    import os

    import requests


    def generate_video(prompt, duration="15s", dimensions="1920x1080"):
        """
        Generate a video using the laozhang.ai Sora API.

        Args:
            prompt (str): Text description of the video to generate
            duration (str): Length of the video as a seconds string (e.g. "15s")
            dimensions (str): Video resolution as "WIDTHxHEIGHT"

        Returns:
            str: URL to the generated video
        """
        api_key = os.environ.get("LAOZHANG_API_KEY")
        if not api_key:
            raise RuntimeError("LAOZHANG_API_KEY environment variable is not set")

        headers = {
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}"
        }

        data = {
            "model": "sora-1",
            "prompt": prompt,
            "duration": duration,
            "dimensions": dimensions
        }

        response = requests.post(
            "https://api.laozhang.ai/v1/videos/generations",
            headers=headers,
            json=data,
            timeout=300  # avoid hanging indefinitely; adjust as needed
        )

        if response.status_code == 200:
            return response.json()["data"][0]["url"]
        else:
            raise Exception(f"Error: {response.status_code}, {response.text}")


    # Example usage
    if __name__ == "__main__":
        try:
            video_url = generate_video(
                "A timelapse of a blooming flower garden with butterflies flying around"
            )
            print(f"Generated video: {video_url}")
        except Exception as e:
            print(f"Failed to generate video: {e}")

3. Web Frontend Integration (React)

    import { useState } from 'react';
    import axios from 'axios';

    function VideoGenerator() {
      const [prompt, setPrompt] = useState('');
      const [duration, setDuration] = useState('15s');
      const [videoUrl, setVideoUrl] = useState('');
      const [loading, setLoading] = useState(false);
      const [error, setError] = useState('');

      // Note: REACT_APP_* variables are bundled into the client-side build,
      // so this key is visible to anyone using the page. For production,
      // route requests through your own backend instead.
      const apiKey = process.env.REACT_APP_LAOZHANG_API_KEY;

      const handleSubmit = async (e) => {
        e.preventDefault();
        setLoading(true);
        setError('');

        try {
          const response = await axios.post('https://api.laozhang.ai/v1/videos/generations', {
            model: "sora-1",
            prompt,
            duration,
            dimensions: "1280x720" // Lower resolution for web playback
          }, {
            headers: {
              'Content-Type': 'application/json',
              'Authorization': `Bearer ${apiKey}`
            }
          });

          setVideoUrl(response.data.data[0].url);
        } catch (err) {
          setError(err.response?.data?.error?.message || 'Failed to generate video');
        } finally {
          setLoading(false);
        }
      };

      return (
        <div className="max-w-2xl mx-auto p-4">
          <h2 className="text-2xl font-bold mb-4">Sora Video Generator</h2>

          <form onSubmit={handleSubmit} className="space-y-4">
            <div>
              <label className="block mb-1">Video Description:</label>
              <textarea
                value={prompt}
                onChange={(e) => setPrompt(e.target.value)}
                className="w-full p-2 border rounded"
                rows={4}
                placeholder="Describe the video you want to generate..."
                required
              />
            </div>

            <div>
              <label className="block mb-1">Duration:</label>
              <select
                value={duration}
                onChange={(e) => setDuration(e.target.value)}
                className="p-2 border rounded"
              >
                <option value="5s">5 seconds</option>
                <option value="10s">10 seconds</option>
                <option value="15s">15 seconds</option>
                <option value="30s">30 seconds</option>
                <option value="60s">60 seconds</option>
              </select>
            </div>

            <button
              type="submit"
              disabled={loading}
              className="px-4 py-2 bg-blue-500 text-white rounded hover:bg-blue-600 disabled:bg-blue-300"
            >
              {loading ? 'Generating...' : 'Generate Video'}
            </button>
          </form>

          {error && (
            <div className="mt-4 p-3 bg-red-100 text-red-700 rounded">
              {error}
            </div>
          )}

          {videoUrl && (
            <div className="mt-6">
              <h3 className="text-xl font-semibold mb-2">Generated Video:</h3>
              <video
                src={videoUrl}
                controls
                className="w-full rounded shadow-lg"
                autoPlay
                loop
              />
            </div>
          )}
        </div>
      );
    }

    export default VideoGenerator;

Effective Prompt Engineering for Sora

The quality of generated videos heavily depends on the prompts provided. Here are some proven strategies:

1. Prompt Structure Template

Follow this general template for optimal results:

[Scene description] + [Visual style] + [Camera movement] + [Lighting] + [Technical specifications]

Example:

A golden retriever running through a sunlit forest. Cinematic film style with shallow depth of field. Slow motion tracking shot following the dog. Warm afternoon lighting with sun rays through trees. Photorealistic 4K quality.
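The template above lends itself to a small helper that assembles a prompt from its parts, which keeps prompts consistent across an application. The following Python sketch is one possible approach; `compose_prompt` and its argument names are hypothetical, and joining the parts as separate sentences is simply the convention used in the example prompt, not a documented requirement of Sora.

```python
def compose_prompt(scene, style=None, camera=None, lighting=None, technical=None):
    """Join the template parts into one prompt, one sentence per part."""
    if not scene or not scene.strip():
        raise ValueError("A scene description is required")
    sentences = []
    for part in (scene, style, camera, lighting, technical):
        if part and part.strip():
            # Normalize trailing punctuation so each part ends with one period.
            sentences.append(part.strip().rstrip(".") + ".")
    return " ".join(sentences)


if __name__ == "__main__":
    print(compose_prompt(
        scene="A golden retriever running through a sunlit forest",
        style="Cinematic film style with shallow depth of field",
        camera="Slow motion tracking shot following the dog",
        lighting="Warm afternoon lighting with sun rays through trees",
        technical="Photorealistic 4K quality",
    ))
```

Called with the five parts shown, the helper reproduces the example prompt above; omitted parts are skipped.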

2. Specifying Visual Elements

Be explicit about important visual elements:

- Time of day: "at sunset", "during morning fog"

- Weather conditions: "light rain", "snow falling"

- Camera angles: "aerial view", "tracking shot", "close-up"

- Movement: "slow motion", "time-lapse"

- Style references: "like a Wes Anderson film", "documentary style"

3. Common Pitfalls to Avoid

Based on developer feedback, these prompt issues commonly lead to subpar results:

- Overly complex scenes with too many actions or subjects

- Contradictory elements that confuse the model

- Vague descriptions that lack specific visual guidance

- Text/number rendering requests (Sora struggles with text)

- Exact human faces or specific celebrity likenesses

💡 Prompt Tip: Sora excels at nature scenes, atmospheric environments, and animals. For best results with human subjects, focus on activities and movements rather than specific facial details or identities.

Limitations and Future Developments

Understanding current limitations helps set realistic expectations:

Current Limitations

Based on developer reports and official documentation:

- Content restrictions: Cannot generate violent, explicit, or harmful content

- Context retention: Struggles to maintain context between video generations

- Text rendering: Poor handling of text within videos

- Specific human likenesses: Limited ability to create specific people

- Complex physics: Occasionally creates physically impossible scenarios

- Duration constraints: Maximum video length of 60 seconds

- Rate limits: Tight API rate limits, especially for new accounts
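Tight rate limits are usually handled on the client with retries and exponential backoff. The Python sketch below shows the pattern in isolation. It assumes the API signals rate limiting in a way the caller can detect (HTTP 429 is the conventional status code, but that is an assumption here, not something this guide confirms for Sora); `RateLimited` and `with_backoff` are hypothetical names, and `send` is any function that performs the request and raises `RateLimited` when throttled.

```python
import time


class RateLimited(Exception):
    """Raised by the caller-supplied send function when the API throttles it."""


def with_backoff(send, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call send(), retrying on RateLimited with delays of 1x, 2x, 4x, ... base_delay."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return send()
        except RateLimited:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            sleep(base_delay * 2 ** attempt)


if __name__ == "__main__":
    state = {"calls": 0}

    def flaky_send():
        # Stand-in for a real request: throttled twice, then succeeds.
        state["calls"] += 1
        if state["calls"] < 3:
            raise RateLimited()
        return "https://example.com/video.mp4"

    print(with_backoff(flaky_send, base_delay=0.1))
```

Passing `sleep` as a parameter keeps the helper testable without real waiting; adding random jitter to the delay is a common refinement when many clients retry at once.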

Upcoming Features (Expected in Q3-Q4 2025)

According to insider information and OpenAI's development roadmap:

- Improved context handling between generation sessions

- Enhanced physics simulation for more realistic motion

- Audio generation capabilities for synchronized sound

- Longer video durations beyond the current 60-second limit

- Style fine-tuning options for more control over visual aesthetics

- Multi-shot video generation for complex narratives

Conclusion: Getting Started Today

While Sora represents a revolutionary advancement in AI video generation, access to the official API remains limited. However, this shouldn't stop developers from beginning to explore and integrate this technology into their applications.

By leveraging third-party transit APIs like laozhang.ai, you can:

- Start developing immediately without waiting for official access

- Reduce costs significantly compared to official pricing

- Build and test applications with identical API structures

- Seamlessly migrate to official APIs when broader access becomes available

Register for laozhang.ai API access at https://api.laozhang.ai/register/?aff_code=JnIT to receive immediate API keys with free credits for testing.

As with any rapidly evolving technology, staying informed about the latest updates and best practices will be key to maximizing the potential of Sora video generation in your applications.

Frequently Asked Questions

Q1: Is Sora API available to all developers?

A1: As of May 2025, official Sora API access is limited to approved developers and Enterprise customers. New ChatGPT Plus subscribers may face temporary restrictions. For immediate access, third-party transit APIs like laozhang.ai provide a viable alternative.

Q2: What are the key differences between official API and transit APIs?

A2: The main differences are pricing, availability, and support levels. Transit APIs typically offer lower prices and immediate access but may have slightly higher latency or fewer advanced features. However, they maintain API compatibility for easy future migration.

Q3: Can Sora generate videos with specific people or celebrities?

A3: No, Sora has built-in limitations that prevent generating videos of specific individuals, celebrities, or public figures. This is part of OpenAI's safety measures to prevent misuse.

Q4: What's the maximum video length Sora can generate?

A4: Currently, Sora can generate videos up to 60 seconds in length. Longer videos can be created by generating multiple segments and combining them with video editing tools.
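Planning the segments for a longer video is simple arithmetic: use the fewest segments that fit under the 60-second limit and spread the total evenly across them. The Python sketch below shows one way to do this; `plan_segments` is a hypothetical helper, and the 5-second minimum is taken from the duration range listed in the parameter table earlier in this guide. Combining the generated clips is left to a video editing tool.

```python
def plan_segments(total_seconds, max_segment=60, min_segment=5):
    """Split a target length into the fewest near-equal segments within the limits."""
    if total_seconds < min_segment:
        raise ValueError(f"Total length must be at least {min_segment} seconds")
    count = -(-total_seconds // max_segment)  # ceiling division
    base, remainder = divmod(total_seconds, count)
    # The first `remainder` segments are one second longer so the sum matches.
    return [base + 1] * remainder + [base] * (count - remainder)


if __name__ == "__main__":
    for total in (45, 61, 150):
        segments = plan_segments(total)
        print(total, [f"{s}s" for s in segments])
```

Splitting evenly avoids a very short final clip: a 61-second target becomes segments of 31 and 30 seconds rather than 60 seconds plus a 1-second remainder that would fall below the minimum duration.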

Q5: How accurate is Sora in following specific prompts?

A5: Sora generally follows high-level scene descriptions well but may struggle with very specific details, physics, or text rendering. The model works best with clear visual descriptions and style guidance rather than complex narratives or precise actions.

Update Log

2025-05-20: Initial comprehensive guide